Infrastructure for AI: Why storage matters

Perhaps your organization has recently decided to purchase compute nodes and get started with artificial intelligence (AI). There are many aspects of your IT infrastructure and technology landscape to scrutinize as you prepare for AI workloads, including, and perhaps especially, your storage systems. AI is driven by data, and how your data is stored can significantly affect the outcome of your AI project. In addition, the four stages of the AI pipeline (ingest, preparation, training and inference) each have different storage requirements.

Unfortunately, some organizations focus on the compute side of AI and overlook the storage side. This singular focus can, and sometimes does, lead to the disruption or complete failure of AI projects. Massive amounts of data are needed to facilitate the AI training stage. This data needs to be ingested, stored and prepared so it can be “fed” to the training stage. Without the ability to ingest, store and consume the necessary data for training, the project will be at risk of failure.

AI projects demand a storage infrastructure with excellent performance, scalability and flexibility. The good news is that today’s storage systems can be purpose-built to meet the needs of AI projects. Two examples come from Sierra and Summit, which are among the world’s most powerful supercomputers.

Now, let’s look at some requirements.

Workload specifics and the movement of data

The requirements for each stage of the AI pipeline need to be reviewed against the expected workload of your AI application. Workloads vary, and some companies with large data sets train for long periods of time. Once the training is done, that data is often moved off critical storage platforms to make room for a new workload. Managing data manually can be a challenge, so it’s wise to think ahead about how data is placed on storage and where it will go once training is done. Finding a platform that can move data automatically for you puts you one step closer to efficient and capable storage management for AI.
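To make the idea of automated data movement concrete, here is a minimal sketch in Python. It assumes a simple age-based policy and uses two directories to stand in for a fast tier and a capacity tier. The function name, the directory arguments and the 30-day threshold are all hypothetical, chosen for illustration; they are not features of any particular storage product, which would typically apply such policies inside the file system itself.

```python
import os
import shutil
import time


def move_cold_files(hot_dir, cold_dir, max_age_days=30, now=None):
    """Move files not modified within max_age_days from hot_dir to cold_dir.

    Hypothetical illustration of an age-based tiering policy.
    Returns the sorted list of file names that were moved.
    """
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400  # seconds in a day
    os.makedirs(cold_dir, exist_ok=True)
    moved = []
    for name in sorted(os.listdir(hot_dir)):
        src = os.path.join(hot_dir, name)
        # Only regular files are considered; subdirectories are skipped.
        if os.path.isfile(src) and os.path.getmtime(src) < cutoff:
            shutil.move(src, os.path.join(cold_dir, name))
            moved.append(name)
    return moved
```

In practice you would likely base the decision on more than file age (for example, whether a training run still references the data), but the same pattern of "select by policy, then move" applies.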

After reviewing the implications of your own workload needs, you can decide on the storage technologies that work best for your AI compute infrastructure and project.

Storage needs for different AI stages

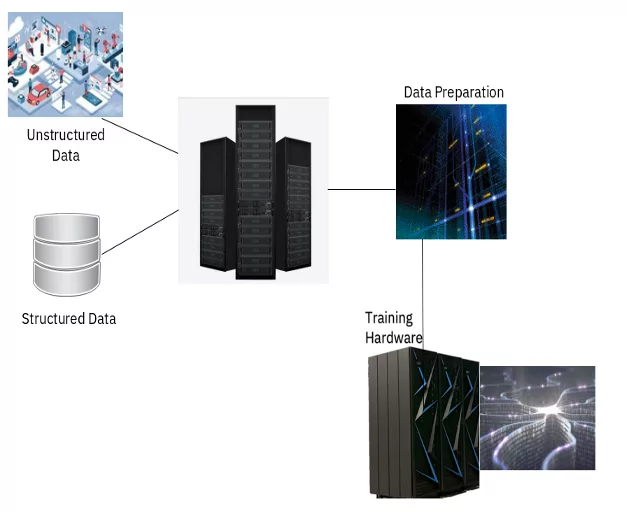

Data ingestion. The raw data for AI workloads can come from a variety of structured and unstructured data sources, and you need a very reliable place to store it. The storage medium could be a high-capacity data lake or a fast tier, such as flash storage, especially for real-time analytics.

Data preparation. Once stored, the data must be prepared since it is in a “raw” format. The data needs to be processed and formatted for consumption by the remaining phases. File I/O performance is a very important consideration at this stage since you now have a mix of random reads and writes. Take the time to figure out what the performance needs are for your AI pipeline. Once the data is formatted, it will be fed into the neural networks for training.
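One rough way to start figuring out those performance needs is to measure how a candidate storage tier handles a mix of random reads and writes. The Python sketch below is only an illustration under stated assumptions: the function name, the 4 KiB block size and the 70/30 read/write split are hypothetical choices, and the operating system’s page cache can make the result look far better than the underlying storage. A dedicated benchmarking tool will give more trustworthy numbers.

```python
import os
import random
import time


def measure_mixed_random_io(path, block_size=4096, num_ops=1000,
                            read_fraction=0.7, seed=0):
    """Return operations per second for random block reads and writes.

    WARNING: overwrites blocks of the file at `path` with zeros, so use a
    scratch file only. Hypothetical sketch, not a rigorous benchmark.
    """
    num_blocks = os.path.getsize(path) // block_size
    if num_blocks == 0:
        raise ValueError("file is smaller than one block")
    rng = random.Random(seed)
    payload = bytes(block_size)  # a block of zero bytes to write
    start = time.perf_counter()
    with open(path, "r+b", buffering=0) as f:
        for _ in range(num_ops):
            # Jump to a random block boundary inside the file.
            f.seek(rng.randrange(num_blocks) * block_size)
            if rng.random() < read_fraction:
                f.read(block_size)
            else:
                f.write(payload)
        os.fsync(f.fileno())  # include the cost of flushing writes
    elapsed = time.perf_counter() - start
    return num_ops / elapsed if elapsed > 0 else float("inf")
```

Run it against a scratch file placed on the storage you want to evaluate, and treat the result as a relative indicator for comparing tiers rather than an absolute figure.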

Training and inferencing. These stages are very compute intensive and generally require streaming data into the training models. Training is an iterative process in which model parameters are set and reset repeatedly until the model is created. Inferencing applies the trained model to new data, so it can be thought of as the sum of the data and the training. The GPUs in the servers and your storage infrastructure become very important here because of the need for low latency, high throughput and quick response times. Your storage networks need to be designed to handle these requirements as well as data ingestion and preparation. At scale, this stresses many storage systems, especially ones not designed for AI workloads, so it’s important to consider specifically whether your storage platform can handle the workload in line with your business objectives.
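A back-of-the-envelope calculation can help you judge whether storage will keep the GPUs busy during training. The sketch below assumes the simplest possible model: every GPU consumes a fixed number of samples per second, every sample has the same size, and nothing is cached. The function name and the example figures are hypothetical, not measurements from any real system.

```python
def required_read_throughput_gb_per_s(num_gpus, samples_per_sec_per_gpu,
                                      bytes_per_sample):
    """Estimate aggregate read throughput (decimal GB/s) needed to feed all GPUs.

    Simplified model: no caching, uniform sample size, steady consumption.
    """
    return num_gpus * samples_per_sec_per_gpu * bytes_per_sample / 1e9


# Hypothetical example: 8 GPUs, each consuming 1,000 samples per second,
# with samples of 150,000 bytes each.
print(required_read_throughput_gb_per_s(8, 1000, 150_000))  # 1.2
```

If the estimate is close to, or above, what your storage and storage network can sustain, the GPUs are likely to sit idle waiting for data.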

Don’t forget capability and flexibility

Also consider: Does your storage infrastructure scale easily? Can you expand the storage system as your data needs grow? These are very important questions that have a direct effect on your AI infrastructure requirements.

Make sure you can scale your storage infrastructure up and out with minimal to no disruption to your production operations, keeping pace with data growth in your business. Be flexible enough to consider different storage configurations for the different needs of the AI infrastructure.
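To get a feel for how quickly data growth can outrun capacity, you can project it with a simple compound-growth model. This Python sketch assumes a constant monthly growth rate, which real environments rarely have; the function name and the example numbers are hypothetical.

```python
def months_until_full(current_tb, capacity_tb, monthly_growth_rate):
    """Return the number of whole months until data exceeds capacity.

    Assumes compound growth at a constant monthly rate (for example, 0.10
    for 10 percent per month).
    """
    if current_tb <= 0 or monthly_growth_rate <= 0:
        raise ValueError("current_tb and monthly_growth_rate must be positive")
    months = 0
    data_tb = current_tb
    while data_tb <= capacity_tb:
        data_tb *= 1 + monthly_growth_rate
        months += 1
    return months


# Hypothetical example: 100 TB stored today, 200 TB of capacity,
# growing 10 percent per month.
print(months_until_full(100, 200, 0.10))  # 8
```

A short runway like this is a signal to plan expansion early, before it disrupts production operations.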

Turn to the experts for advice

Careful planning, matching your AI server and modeling requirements to the storage infrastructure, will help you get the most from your investments and lead to success in your AI projects.

These recommendations are just a starting point. If you don’t have the expertise in your organization to design and implement the right AI storage infrastructure, work with your vendor to help prepare your storage systems for AI.

And if you have questions or are looking for support on planning and preparing for an AI project with IBM Storage, don’t hesitate to contact IBM Systems Lab Services.

The post Infrastructure for AI: Why storage matters appeared first on IBM IT Infrastructure Blog.